A new EU rule requires caps on single-use plastic drink bottles to remain tethered to the bottle after opening, with the aim of reducing plastic litter. While the rule is well-intentioned, the switch away from detachable caps has frustrated many consumers, who find the new caps cumbersome and prone to causing spills. This situation exemplifies how regulatory actions, even with noble goals, can create unintended challenges. The parallel with AI regulation is clear: Overreach, however well-meaning, often stifles innovation and complicates execution.

Regulatory Challenges in the EU

The EU’s approach to AI governance mirrors this dynamic. With the AI Act entering into force on August 1, 2024, and enforcement of most of its provisions beginning in August 2026, the EU has sought to position itself as a global leader in AI regulation. However, its complementary AI Liability Directive, intended to establish a framework for holding parties responsible for harm caused by artificial intelligence systems, faced significant industry backlash and was withdrawn on February 12, 2025. This abrupt reversal highlights the difficulty of balancing innovation with oversight, a challenge the EU is still grappling with.

The AI Act classifies AI-driven medical devices as high-risk, requiring strict compliance with transparency, explainability and risk management protocols. While these measures aim to ensure safety, they also create significant hurdles for developers. The withdrawal of the AI Liability Directive has further complicated the landscape, leaving liability rules fragmented across EU member states. This regulatory uncertainty risks deterring investment and stalling innovation, as companies navigate varying national laws and prolonged approval cycles.

Despite the withdrawal, the EU’s regulatory environment remains stringent. AI-driven medical devices must still adhere to rigorous governance structures and ongoing compliance checks. Many initially viewed the withdrawal as a move toward deregulation, but this is far from the case. The lack of harmonized liability rules across member states has created a patchwork of requirements, making it difficult for companies to achieve broad market adoption.

Adaptive Approach in the U.S.

In contrast, the U.S. has embraced a more flexible regulatory model, particularly in the realm of AI-driven medical devices. FDA’s final guidance on Predetermined Change Control Plans (PCCPs), issued on December 4, 2024, allows companies to implement pre-authorized AI modifications without a new marketing submission for each change. This framework fosters continuous innovation, enabling technologies like AI-powered surgical tools and diagnostic systems to evolve through planned updates rather than repeated regulatory reviews.

For example, AI-enhanced imaging devices from companies like Aidoc and Zebra Medical Vision can detect early signs of diseases such as cancer, heart disease and neurological disorders from radiographs, MRIs and CT scans. This capability for early detection is crucial for timely interventions and better patient prognoses.

Meanwhile, the EU’s rigorous compliance requirements and fragmented regulatory landscape have led to prolonged approval timelines, delaying patients’ access to the latest advances in healthcare while deterring medical investment and innovation in the EU.

Regulatory Divergence

The regulatory divide between the EU and the U.S. has significant commercial implications. U.S. firms benefit from a streamlined approval process that supports iterative AI improvements, reducing delays and enhancing market competitiveness. Meanwhile, EU-based companies face a fragmented regulatory environment, with varying liability laws and stricter compliance requirements. This disparity places EU firms at a distinct disadvantage, potentially driving investment and innovation to more accommodating markets.

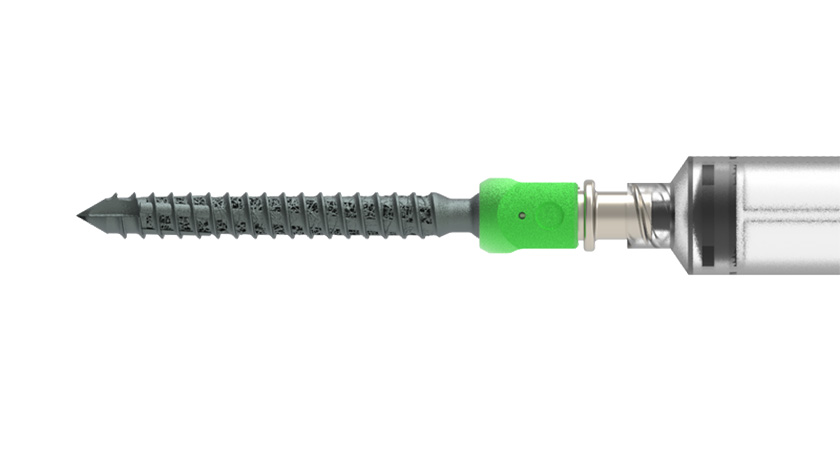

At Orthofix, we have seen firsthand how a more adaptive regulatory framework enables innovation in AI-driven surgical tools. Our Orthonext orthopaedic planning software, developed within a regulatory environment that allows iterative improvements, leverages advanced imaging and real-time data analysis to automatically identify and measure fiducials on a patient’s pre-operative x-ray. This workflow shortens surgical planning time while also reducing the potential for human error.

By integrating AI into our platform, we provide surgeons with a reliable tool that supports precision, enhances procedural consistency and is well-positioned for safe and effective surgical application. Our experience highlights the benefits of regulatory models that encourage responsible innovation, ensuring that AI-driven advancements in surgery are both effective and commercially viable.

Balancing Innovation and Safety

Regulatory policies directly affect patient outcomes. In the U.S., FDA’s PCCP framework enables rapid integration of advancements in AI-driven medical devices, allowing patients earlier access to cutting-edge technologies. For instance, AI-powered imaging systems can detect abnormalities in medical scans much faster than human readers, enabling earlier diagnosis and treatment.

In contrast, the EU’s more cautious regulatory approach may delay the availability of innovative AI-driven medical devices. While this ensures comprehensive safety evaluations, it can result in patients waiting longer for potentially life-saving technologies. This trade-off between innovation speed and safety assurance is a critical consideration in regulatory policy development.

The Future of AI in Medical Innovation

The debate over AI regulation is no longer theoretical; it is a defining factor in the future of medical technology. The U.S. has embraced a model that enables continuous AI evolution, while the EU’s stringent frameworks create barriers that hinder innovation.

As AI continues to advance, companies that navigate these regulatory landscapes strategically will emerge as leaders.

To remain competitive, the EU must reconsider its approach, embracing flexibility without compromising safety. Meanwhile, the U.S. must ensure that recent changes within its regulatory agencies do not introduce new bottlenecks that could counteract the progress made through adaptive frameworks like FDA’s PCCP.

With key personnel shifts and restructuring efforts underway, maintaining a commitment to efficiency and innovation will be critical to sustaining U.S. leadership in medical AI. The balance between innovation and oversight will define the success of AI in healthcare, and those who align compliance with opportunity will set the pace for AI-powered medical innovation globally.

FDA Layoffs

Recent shifts within FDA highlight the ongoing evolution of regulatory philosophies. As this article neared completion, FDA announced unexpected staffing cuts, with the Center for Devices and Radiological Health (CDRH) hit the hardest. Given this column’s focus on transatlantic regulatory differences, we would be remiss not to acknowledge this development.

While the full implications remain uncertain, this transition raises concerns about potential delays in regulatory reviews. It also underscores the agency’s commitment to modernizing its approach by prioritizing efficiency and adaptability in overseeing AI-driven medical technologies. As both the U.S. and the EU continue to navigate the balance between rigorous oversight and fostering innovation, the evolving situation at FDA serves as a timely reminder of the complexities involved in shaping effective regulatory frameworks.

Dr. Erturan is Director – Global Lead Clinical & Medical Affairs at Orthofix and a member of the Orthopaedic Surgical Manufacturers Association (OSMA).

Dr. Standish is Chief Enabling Technology Officer at Orthofix.